This implementation example is meant to give an idea of how to realize a shader-based approach to screen space ambient occlusion (SSAO). It may also serve as a basic introduction to shader programming with GLSL. The vector-based SSAO approach is used here.

Overview

As SSAO is done in a post-processing step, multi-pass rendering is used here. The whole process is structured as follows:

- Geometry pass: the scene geometry is rendered; normals, linear depth, and per-pixel lighting information are stored

- SSAO: occlusion is calculated from the previously stored data

- Blurring: a Gaussian bilateral blur is applied to the occlusion values in two steps (separable filter)

- Display: the rendered result is shown in the frame buffer

In the implementation, a method doRendering() encapsulating this structure might look like this:

    void SSAO::doRendering()
    {
        geometryPass();

        // do SSAO calculation
        ssaoPass();

        if (doBlur)
        {
            blurPassFirst();
            blurPassSecond();
            displayPass();
        }
        else // no blurring done, the unblurred AO has to be shown
        {
            displayPass();
        }

        glutSwapBuffers();
    }

Geometry pass

The first stage of our multi-pass rendering approach renders the scene geometry and stores the following data for the later SSAO computation:

- Linear depth values of all rendered (visible) surface points in the camera coordinate system

- Normals of the rendered surface points

- Shaded color for every pixel (shaded per vertex or per pixel), so that the occlusion can be applied to it later

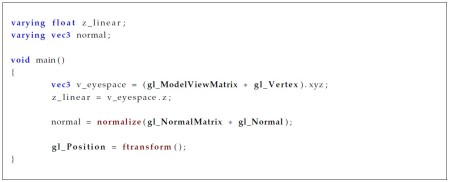

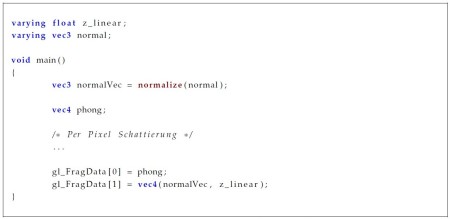
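To illustrate why linear depth is stored explicitly, the following C++ sketch compares it with the value a standard OpenGL perspective projection would write to the depth buffer. The function names, the near/far parameters, and the normalization of the linear depth by the far plane distance are assumptions made for this illustration and are not part of the implementation described here.

```cpp
#include <cmath>

// Window-space depth in [0, 1] produced by a standard OpenGL perspective
// projection (as built by glFrustum/gluPerspective) for a point at
// eye-space z = zEye. zEye is negative in front of the camera.
double windowDepth(double zEye, double zNear, double zFar)
{
    // Normalized device coordinate z in [-1, 1]; note the 1/zEye term,
    // which makes the stored value non-linear in the distance.
    double zNdc = (zFar + zNear) / (zFar - zNear)
                + (2.0 * zFar * zNear) / ((zFar - zNear) * zEye);
    return 0.5 * zNdc + 0.5;
}

// Linear depth: distance along the view axis.
// Dividing by zFar is an assumption made here to get a comparable [0, 1] range.
double linearDepth(double zEye, double zFar)
{
    return -zEye / zFar;
}
```

With zNear = 0.1 and zFar = 100, a point at z_eye = -1 lies at only 1% of the far distance, yet windowDepth already returns roughly 0.9. Depth differences between neighbouring surface points are therefore much easier to compare when the linear value is stored.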

While the shading itself could be performed by the fixed-function OpenGL pipeline, a shader is needed to output linear depth values and normals. The high-level shading language GLSL is used for this. A possible implementation is shown in the following listings for the vertex and fragment shader.

The vertex shader transforms the respective vertex (gl_Vertex) into the camera coordinate system. The depth value z_eye is passed to the fragment shader as a varying and is therefore linearly interpolated. The normal has to be transformed to camera coordinates as well and needs re-normalization after the transformation. The gl_NormalMatrix provided by GLSL has to be used for this instead of the gl_ModelViewMatrix; otherwise the normals may be distorted, for example when the model-view matrix contains non-uniform scaling. The reason is that normals are direction vectors that must stay perpendicular to the surface, not points in 3D space. The gl_NormalMatrix is the inverse transpose of the upper-left 3×3 part of the gl_ModelViewMatrix. The correctly transformed normals are linearly interpolated and passed to the fragment shader.
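The effect of the normal matrix can be checked on the CPU. The following C++ sketch is only an illustration under assumed names (Vec3, Mat3, mul, dot, normalize, normalMatrix are hypothetical helpers, not part of GLSL or of the implementation described here). It computes the inverse transpose of a 3×3 matrix, which is what gl_NormalMatrix holds for the upper-left part of the model-view matrix.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major: m[row][col]

Vec3 mul(const Mat3& m, const Vec3& v)
{
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = m[i][0] * v[0] + m[i][1] * v[1] + m[i][2] * v[2];
    return r;
}

double dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

Vec3 normalize(const Vec3& v)
{
    double len = std::sqrt(dot(v, v));
    return { v[0] / len, v[1] / len, v[2] / len };
}

// Inverse transpose of an invertible 3x3 matrix.
// inverse(M) = cofactor(M)^T / det(M), hence inverse(M)^T = cofactor(M) / det(M).
Mat3 normalMatrix(const Mat3& m)
{
    Mat3 c;
    c[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];
    // Determinant by expansion along the first row (assumed non-zero).
    double det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    for (auto& row : c)
        for (double& x : row)
            x /= det;
    return c;
}
```

As an example, take a model-view matrix that scales x by 2, a surface tangent (1, 1, 0), and the normal (1, -1, 0). Transforming the normal with the matrix itself gives (2, -1, 0), which is no longer perpendicular to the transformed tangent (2, 1, 0). Transforming it with normalMatrix gives (0.5, -1, 0), which is perpendicular again and only needs re-normalization.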

The fragment shader stores the interpolated variables received from the vertex shader. Two render targets are bound (gl_FragData[0] and gl_FragData[1]). A per-pixel shading routine could be implemented in this shader. Its result might be stored in the first render target, while normals and linear depth are stored in the second one: the normal is a 3-component vector, so the w-component of the RGBA render target can be used to store the depth value.
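The data layout of the two render targets can be mimicked on the CPU. The following C++ sketch is only a model of what one pixel holds after the geometry pass. The types and functions (Vec3, Vec4, GBufferTexel, writeGBuffer, readNormal, readDepth) are hypothetical names chosen for this illustration, and the re-normalization of the interpolated normal is an assumption, motivated by the fact that linear interpolation generally shortens unit vectors.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };

// One pixel of the two render targets written by the geometry pass.
struct GBufferTexel {
    Vec4 color;        // corresponds to gl_FragData[0]: shaded color
    Vec4 normalDepth;  // corresponds to gl_FragData[1]: xyz = normal, w = linear depth
};

// Packs the shaded color, the eye-space normal, and the linear depth
// the same way the fragment shader is described to do.
GBufferTexel writeGBuffer(const Vec4& shadedColor, const Vec3& normalEye, float linearDepth)
{
    // Re-normalize the interpolated normal (assumption, see lead-in).
    float len = std::sqrt(normalEye.x * normalEye.x +
                          normalEye.y * normalEye.y +
                          normalEye.z * normalEye.z);
    GBufferTexel texel;
    texel.color = shadedColor;
    texel.normalDepth = { normalEye.x / len, normalEye.y / len, normalEye.z / len, linearDepth };
    return texel;
}

// What a later pass would read back from the second render target.
Vec3 readNormal(const GBufferTexel& t) { return { t.normalDepth.x, t.normalDepth.y, t.normalDepth.z }; }
float readDepth(const GBufferTexel& t)  { return t.normalDepth.w; }
```

Note that this layout stores signed normal components and unbounded depth values, so it presumes a floating-point render target; with an 8-bit normalized format the values would have to be remapped to the range [0, 1] first.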