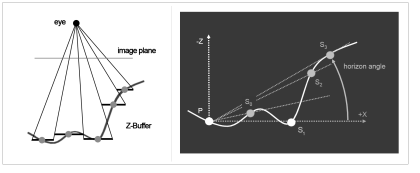

Screen Space Ambient Occlusion (SSAO) denotes the class of algorithms that compute occlusion in image space (screen space). In contrast to object space, screen space is the coordinate system obtained after projection, at the end of the pipeline, once the viewport transformation has been applied. It is therefore a 2D coordinate system whose x- and y-axes run over all pixels of the render target (the visible window or an offscreen target). It should be emphasized that SSAO is always processed after the visibility test (Z-Buffer etc.) has been done and the actually visible pixels have been determined. This makes SSAO very efficient, but it also limits the occlusion to surface points that the camera can see.

SSAO algorithms generally need information that is not available in screen space itself: in most cases, a linear depth value for every pixel and the corresponding surface normal are required.
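As a minimal sketch of where such linear depth values can come from, the following function converts a hardware depth value back to a linear distance. It assumes an OpenGL-style perspective projection with normalized device depth in [-1, 1]; the function name and the `near`/`far` parameters are illustrative and not taken from any of the cited papers. Many implementations instead write linear depth directly into a separate render target.

```python
def linearize_depth(z_ndc: float, near: float, far: float) -> float:
    """Map an OpenGL-style NDC depth in [-1, 1] to a linear eye-space distance.

    Assumption: standard perspective projection with clip planes at
    distances `near` and `far` (both positive, near < far).
    """
    return (2.0 * near * far) / (far + near - z_ndc * (far - near))


if __name__ == "__main__":
    # The midpoint of the NDC range is far from the midpoint of the
    # linear range, which illustrates the non-linear depth distribution.
    for z in (-1.0, 0.0, 0.9, 1.0):
        print(z, linearize_depth(z, near=1.0, far=100.0))
```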

Crytek’s idea

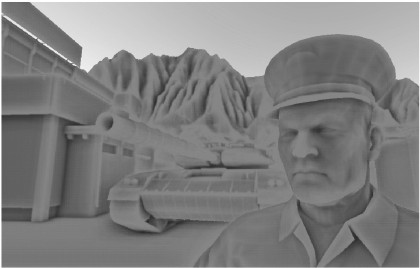

Game company Crytek first published an SSAO approach as part of their game engine CryENGINE® 2. As this technique forms the basis for the methods that followed, it is explained in detail here. The publication [Mit07] says very little about the SSAO approach itself. A detailed description can be found in "Shader X7: Advanced Rendering Techniques" [Eng07].

Crytek's approach is based on computing the ratio between solid geometry and empty space in the vicinity of a surface point. This ratio serves as an approximate measure of occlusion. To obtain it, the neighbourhood of the surface point is sampled in object space (here: the camera coordinate system). As the algorithm works in screen space, the object space position of the pixel of interest has to be computed first. This is done by unprojection using a previously acquired linear Z-Buffer. This buffer differs from the standard Z-Buffer produced by the rendering pipeline, which generally stores a non-linear function of the depth in the camera coordinate system. Sample points in object space are formed by adding offset vectors to the object space position of the current pixel. The result is a set of 3D points sampling a roughly spherical environment of the point of interest. These points can lie in empty space, on a geometry surface, or inside solid geometry. To determine where they lie, every sample point is projected back to screen space. The resulting 2D pixel positions are used for a lookup in the linear Z-Buffer. If the depth value read is farther from the camera than the depth coordinate of the sample point, the sample point lies in empty space. A closer depth value indicates a sample point enclosed in geometry. Performing this simple depth comparison for all sample points yields the desired ratio between empty space and solid geometry.
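The steps described above (unproject, offset, reproject, compare depths) can be sketched on the CPU as follows. This is an illustration under stated assumptions, not Crytek's shader: it assumes a simple pinhole camera with a focal length given in pixels, a depth buffer stored as a list of rows of linear depths, and a hand-picked symmetric kernel. All names (`unproject`, `project`, `open_space_ratio`, `KERNEL`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical symmetric offset kernel in camera space (not Crytek's values).
KERNEL: List[Vec3] = [
    (0.2, 0.0, 0.5), (0.2, 0.0, -0.5),
    (-0.2, 0.0, 0.5), (-0.2, 0.0, -0.5),
    (0.0, 0.2, 0.3), (0.0, -0.2, -0.3),
]


def unproject(px: int, py: int, depth: float,
              focal: float, cx: float, cy: float) -> Vec3:
    """Pixel plus linear depth -> camera-space position (pinhole model)."""
    return ((px - cx) * depth / focal, (py - cy) * depth / focal, depth)


def project(p: Vec3, focal: float, cx: float, cy: float) -> Tuple[int, int]:
    """Camera-space position -> nearest pixel coordinates."""
    x, y, z = p
    return (round(x * focal / z + cx), round(y * focal / z + cy))


def open_space_ratio(depth: Sequence[Sequence[float]], px: int, py: int,
                     offsets: Sequence[Vec3], focal: float) -> float:
    """Fraction of sample points around pixel (px, py) lying in empty space."""
    height, width = len(depth), len(depth[0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    centre = unproject(px, py, depth[py][px], focal, cx, cy)
    open_count = 0
    total = 0
    for ox, oy, oz in offsets:
        sample = (centre[0] + ox, centre[1] + oy, centre[2] + oz)
        if sample[2] <= 0.0:
            continue  # behind the camera, cannot be projected
        sx, sy = project(sample, focal, cx, cy)
        if not (0 <= sx < width and 0 <= sy < height):
            continue  # outside the render target, no depth available
        total += 1
        # Stored surface farther away than the sample: sample is in empty space.
        if depth[sy][sx] > sample[2]:
            open_count += 1
    return open_count / total if total else 1.0


if __name__ == "__main__":
    wall = [[5.0] * 9 for _ in range(9)]  # flat wall facing the camera
    print(open_space_ratio(wall, 4, 4, KERNEL, focal=20.0))
```

For the flat wall in the demo, half of the symmetric kernel ends up behind the wall, so the sketch reports a ratio of 0.5 even though the wall is not occluded by anything.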

Jittering and Blurring

Sampling processes always depend strongly on the underlying sampling pattern. For SSAO, a constant pattern of offset vectors produces strong aliasing artefacts in the final occlusion image. These artefacts can be avoided by using a randomly altered sampling pattern. This technique is called jittering. Crytek applies a random rotation to the constant pattern to obtain individual offset vectors for every pixel in screen space. However, jittering always introduces high-frequency noise into the image, so a blur pass has to be applied to the resulting occlusion image afterwards to smooth out the noise.

[Mit07] gives an update on this jittering technique. The random rotation is replaced by a reflection of the sampling pattern at a random plane. A plane is mathematically described by a normal and a point. As this plane is intended to reflect the offset vectors, it is always located at the object space position of our point of interest. A random normal is therefore sufficient to define the reflection plane.
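Because the plane passes through the point of interest, reflecting an offset vector v only needs the unit normal n: v' = v - 2 (v · n) n. A minimal sketch of this step follows; the rejection-sampling helper for the random normal is an assumption of this example and not taken from [Mit07], and a shader would typically read the normal from a small noise texture instead.

```python
import math
import random
from typing import Tuple

Vec3 = Tuple[float, float, float]


def random_unit_vector(rng: random.Random) -> Vec3:
    """Draw a uniformly distributed unit vector by rejection sampling."""
    while True:
        v = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        n2 = sum(c * c for c in v)
        if 1e-6 < n2 <= 1.0:
            n = math.sqrt(n2)
            return (v[0] / n, v[1] / n, v[2] / n)


def reflect(v: Vec3, n: Vec3) -> Vec3:
    """Reflect offset vector v at the plane through the origin with unit normal n."""
    d = sum(a * b for a, b in zip(v, n))
    return (v[0] - 2.0 * d * n[0], v[1] - 2.0 * d * n[1], v[2] - 2.0 * d * n[2])


if __name__ == "__main__":
    rng = random.Random(1)
    normal = random_unit_vector(rng)  # one random normal per pixel
    kernel = [(0.3, 0.0, 0.1), (0.0, -0.2, 0.2)]
    print([reflect(v, normal) for v in kernel])
```

A reflection preserves the length of every offset vector, so the sampling radius stays the same for all pixels.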

Evaluation

The drawback of this method is the use of this ratio to approximate occlusion. Surfaces that are fully unoccluded and exposed to the incoming light are darkened by mistake: a flat surface, for example, yields a ratio of 0.5, because the spherical environment of such a surface point is split evenly (1:1) into empty space and geometry. In addition, the method produces highlighting artefacts at edges. Crytek interprets these as a visual advantage, but they remain artefacts.

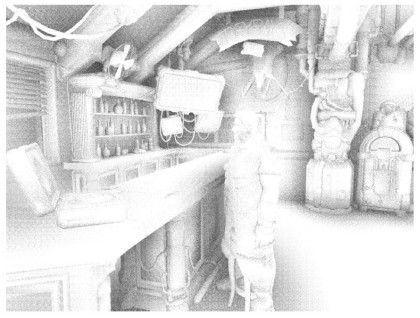

SSAO by Blizzard in Starcraft II

[Fil08] introduces a method very similar to the one used by Crytek. The sampling process using offset vectors and the jittering method are the same. Occlusion, however, is computed in a different way. Based on the same depth comparison between the sample point and the depth read from the linear Z-Buffer, only points lying inside geometry are considered occluders. The ratio used by Crytek is not computed, and points in empty space are ignored as non-occluders. For every detected occluder, the amount of occlusion is measured by considering the depth difference to the point of interest. Intuitively, farther points occlude less than points very close to the surface. An exponential function is used to model the attenuation with distance. The reader is encouraged to read the StarCraft paper, as it clearly describes the approach and particularly the sampling process using offset vectors.
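The occluder-only accumulation with distance attenuation can be sketched as follows. This is an illustration, not the function from [Fil08]: the plain `exp(-falloff * delta)` shape, the `falloff` parameter, and the convention that a positive depth delta marks a sample found inside geometry are all assumptions of this example.

```python
import math
from typing import Sequence


def attenuated_occlusion(depth_deltas: Sequence[float], falloff: float = 2.0) -> float:
    """Average occlusion over all samples, counting occluders only.

    depth_deltas: one value per sample. A positive value marks a sample
    detected inside geometry (an occluder); its magnitude is the depth
    difference used for attenuation. Non-positive values are samples in
    empty space and contribute nothing.
    """
    if not depth_deltas:
        return 0.0
    total = 0.0
    for delta in depth_deltas:
        if delta > 0.0:
            # Nearby occluders count almost fully, distant ones fade out.
            total += math.exp(-falloff * delta)
    return total / len(depth_deltas)


if __name__ == "__main__":
    print(attenuated_occlusion([-0.3, 0.05, 0.8, -0.1]))
```

Unlike the ratio used by Crytek, samples in empty space add nothing here, so a surface without any detected occluders receives an occlusion of zero.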

This method uses an edge-preserving blur filter to smooth the noisy occlusion. Filters of this kind are used very often in the field of SSAO.

A significant problem of this approach is self-occlusion. It arises when offset vectors penetrate the surface of the object itself and thus produce sample points that are detected as occluders, which can be wrong, particularly on flat surfaces. Areas that are actually unoccluded are then shadowed because of the incorrect occlusion computation. The authors deal with this problem by flipping all offset vectors that do not point into the visible hemisphere of the point of interest.
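The flip described above amounts to a sign test against the surface normal. A minimal sketch, assuming the normal at the point of interest is available in the same coordinate system as the offset vectors (the function name is illustrative):

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def flip_into_hemisphere(offset: Vec3, normal: Vec3) -> Vec3:
    """Return the offset unchanged if it points away from the surface,
    otherwise return it negated so that it lies in the normal's hemisphere."""
    d = offset[0] * normal[0] + offset[1] * normal[1] + offset[2] * normal[2]
    if d >= 0.0:
        return offset
    return (-offset[0], -offset[1], -offset[2])


if __name__ == "__main__":
    normal = (0.0, 0.0, 1.0)
    print(flip_into_hemisphere((0.2, 0.1, -0.4), normal))
```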

Evaluation

This approach does not produce the wrong shadowing that occurs in Crytek’s technique. However, the computed occlusion is quite noisy, which leaves room for improvement.

Shanmugam and Arikan [Sha07]

The following approach differs clearly from those introduced by Crytek and Blizzard. Shanmugam and Arikan present an approach that separates the high-frequency and low-frequency computation of AO.

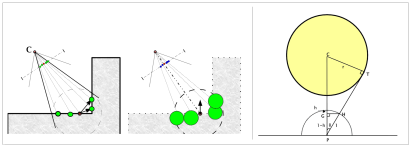

Their method is based on the occlusion of a surface point caused by an occluding sphere. The motivation is that occlusion by a sphere is mathematically easy to compute. A sphere is fully defined by its position and radius, and the measure of its occlusion is given by the projected area of the sphere as seen from the respective surface point.
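To illustrate why spheres are convenient, the sketch below estimates the occlusion of a surface point by a single sphere from the solid angle the sphere subtends, Ω = 2π (1 - sqrt(1 - (r/d)²)), weighted by the cosine between the surface normal and the direction to the sphere centre. The division by π (the cosine-weighted hemisphere integral), the clamping, and the handling of points inside the sphere are assumptions of this example and not necessarily the exact formula used in [Sha07].

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sphere_solid_angle(distance: float, radius: float) -> float:
    """Solid angle of a sphere of given radius seen from the given distance."""
    if distance <= radius:
        return 2.0 * math.pi  # limit value reached when touching the sphere
    sin_alpha = radius / distance
    return 2.0 * math.pi * (1.0 - math.sqrt(1.0 - sin_alpha * sin_alpha))


def sphere_occlusion(point: Vec3, normal: Vec3, centre: Vec3, radius: float) -> float:
    """Approximate occlusion in [0, 1] of `point` (unit `normal`) by one sphere."""
    to_centre = (centre[0] - point[0], centre[1] - point[1], centre[2] - point[2])
    dist = math.sqrt(sum(c * c for c in to_centre))
    if dist <= radius:
        return 1.0  # point inside the sphere: treated as fully occluded here
    cos_theta = sum(n * c for n, c in zip(normal, to_centre)) / dist
    cos_theta = max(cos_theta, 0.0)  # spheres below the surface do not occlude
    return min(1.0, sphere_solid_angle(dist, radius) * cos_theta / math.pi)


if __name__ == "__main__":
    print(sphere_occlusion((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 2.0), 1.0))
```

For a small, distant sphere this estimate approaches (r/d)² cos θ, so halving the distance roughly quadruples the occlusion.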

For the high-frequency occlusion, samples are created in screen space around the pixel of interest. Their object space positions are computed by unprojection using the linear Z-Buffer, and a sphere is assumed at every resulting position to determine the occlusion.

The low-frequency computation needs an approximation of the scene geometry using spherical proxies. For every pixel in screen space, the occlusion caused by a proxy has to exceed a given threshold for the proxy to be considered any further. As the proxies are spherical, the mathematical basis for the occlusion computation is the same as for the high-frequency part. Finally, the occlusion values of every pixel are summed over all considered proxies, and the result is added to the high-frequency occlusion.

Evaluation

The visual results of this approach are quite good, as the comparison with the raytraced reference images in their paper shows. The main problems are the implementation effort and the poor performance caused by the need for two separate computation passes. In particular, the approximation with spherical proxies always depends on the scene geometry, which is inefficient for complex scenes and especially for mass data scenarios. The approximation is also computed on the CPU, which is a further drawback.

Horizon Based Ambient Occlusion [Bav08]

This technique uses the notion that the amount of occluding geometry surrounding a surface point can be detected by searching for the horizon in the point's vicinity. The linear Z-Buffer, which is used here as well, is interpreted as a height field, and the horizon is defined by the average slope in this height field around the surface point. For this purpose, sample directions around the pixel of interest are chosen in image space, and sample points are created along those directions, yielding a circular sampling area around the pixel. The horizon angle is found by some trigonometric computations. Intuitively, heavily occluded surface points reveal a very steep horizon, indicated by a large horizon angle.
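The horizon search can be illustrated on a one-dimensional height field, which corresponds to marching along a single pair of opposite sample directions. The sketch assumes that larger height values are closer to the camera, that the surface tangent at the point of interest is horizontal, that the horizon along one direction is the steepest elevation angle found, and that the per-direction results are averaged. These simplifications and all names are assumptions of this example; [Bav08] describes the full method including the tangent angle and distance attenuation.

```python
import math
from typing import Sequence


def horizon_angle(heights: Sequence[float], index: int, direction: int,
                  max_steps: int, spacing: float = 1.0) -> float:
    """Steepest elevation angle (radians, >= 0) seen from heights[index]
    when marching up to max_steps samples in the given direction (+1 or -1)."""
    horizon = 0.0
    for step in range(1, max_steps + 1):
        j = index + direction * step
        if not 0 <= j < len(heights):
            break  # left the height field
        angle = math.atan2(heights[j] - heights[index], step * spacing)
        horizon = max(horizon, angle)
    return horizon


def horizon_occlusion(heights: Sequence[float], index: int,
                      max_steps: int, spacing: float = 1.0) -> float:
    """Average of sin(horizon angle) over the two opposite directions."""
    angles = [horizon_angle(heights, index, d, max_steps, spacing) for d in (-1, 1)]
    return sum(math.sin(a) for a in angles) / len(angles)


if __name__ == "__main__":
    valley = [2.0, 1.0, 0.0, 1.0, 2.0]
    print(horizon_occlusion(valley, index=2, max_steps=2))
```

A point on a flat field sees a horizon angle of zero in every direction and is therefore unoccluded, while the bottom of the valley in the demo sees a 45 degree horizon on both sides.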

Sampling is done completely in image space. Jittering is achieved by a random rotation of the initial sampling directions. The noisy occlusion is blurred using a cross bilateral filter, which is essentially an edge-preserving Gaussian filter. Edges have to be taken into account because occlusion must not be blurred across different objects that lie far apart, which is indicated by large depth differences in the linear Z-Buffer.
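A one-dimensional sketch of such a cross bilateral filter is shown below. Each neighbour is weighted by a spatial Gaussian and by a second Gaussian on the depth difference taken from the linear Z-Buffer, so neighbours across a depth discontinuity contribute almost nothing. The parameter names and default values are assumptions of this example; a real implementation would typically run as two separable passes over the 2D occlusion image.

```python
import math
from typing import List, Sequence


def cross_bilateral_blur(ao: Sequence[float], depth: Sequence[float],
                         radius: int = 2, sigma_space: float = 1.5,
                         sigma_depth: float = 0.1) -> List[float]:
    """Blur the occlusion values `ao`, guided by the linear depths `depth`."""
    out: List[float] = []
    for i in range(len(ao)):
        weight_sum = 0.0
        value_sum = 0.0
        for j in range(max(0, i - radius), min(len(ao), i + radius + 1)):
            # Ordinary Gaussian falloff with pixel distance.
            w_space = math.exp(-((j - i) ** 2) / (2.0 * sigma_space ** 2))
            # Edge-preserving term: large depth differences suppress the weight.
            w_depth = math.exp(-((depth[j] - depth[i]) ** 2) / (2.0 * sigma_depth ** 2))
            weight = w_space * w_depth
            weight_sum += weight
            value_sum += weight * ao[j]
        out.append(value_sum / weight_sum)  # weight_sum >= 1 (centre pixel)
    return out


if __name__ == "__main__":
    noisy_ao = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
    depths = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]  # two objects far apart in depth
    print(cross_bilateral_blur(noisy_ao, depths))
```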

Evaluation

The horizon-based approach works very effectively and achieves a performance suitable for realtime rendering. The overall visual quality is satisfying even for low sample counts of 36 samples, and the result shows less noise than the previously presented approaches.

Approximating Dynamic Global Illumination in Image Space [Rit09]

As the name already says, this paper goes beyond ambient occlusion and tries to approximate global illumination efficiently in image space. As part of it, AO is computed in screen space, which is why this approach is mentioned here. However, the method used is very similar to the one introduced by [Fil08]. The authors sample in object space and project every sample point to obtain the depth value stored for this projection ray in the linear Z-Buffer. Then an unprojection is done with that depth value, and the position of the resulting point is compared to the actual sample point on this projection ray. They distinguish occluders from non-occluders by comparing their distances to the image plane, whereas [Fil08] simply compares the depth values, which appears to be more efficient. Finally, only the samples that do not occlude the surface point are considered to compute a kind of indirect illumination from a single bounce.

Their paper further addresses the limitations of screen space methods by using multiple views or depth peeling.

As their approach does not provide an alternative method for AO computation, no evaluation is given here.

Summary – pros and cons for Screen Space approaches

The pros:

- Computational effort is independent of the polygon count of the scene

- Occlusion is calculated dynamically in realtime

- No preprocessing or precomputation

- Easy integration into existing rendering pipelines

Several cons must be named:

- Computational effort depends on the screen (rendering) resolution

- Viewpoint dependency: hidden objects and geometry outside the view frustum are not considered

- Occlusion is always noisy and has to be blurred

References

[Bav08] L. Bavoil, M. Sainz, and R. Dimitrov. Image-space horizon-based ambient occlusion. In SIGGRAPH '08: ACM SIGGRAPH 2008 talks, pages 1-1, New York, NY, USA, 2008. ACM. ISBN 978-1-60558-343-3. URL http://developer.nvidia.com/object/siggraph-2008-HBAO.htm.

[Eng07] W. Engel (editor). Shader X7: Advanced Rendering Techniques. Charles River Media, 2009.

[Fil08] D. Filion and R. McNaughton. Effects & techniques. In SIGGRAPH '08: ACM SIGGRAPH 2008 classes, pages 133-164, New York, NY, USA, 2008. ACM. doi: http://doi.acm.org/10.1145/1404435.1404441.

[Mit07] M. Mittring. Finding next gen: CryEngine 2. In SIGGRAPH '07: ACM SIGGRAPH 2007 courses, pages 97-121, New York, NY, USA, 2007. ACM. doi: http://doi.acm.org/10.1145/1281500.1281671.

[Rit09] T. Ritschel, T. Grosch, and H.-P. Seidel. Approximating dynamic global illumination in image space. In Proceedings of the ACM Symposium on Interactive 3D Graphics and Games, 2009.

[Sha07] P. Shanmugam and O. Arikan. Hardware accelerated ambient occlusion techniques on GPUs. In I3D '07: Proceedings of the 2007 symposium on Interactive 3D graphics and games, pages 73-80, New York, NY, USA, 2007. ACM. ISBN 978-1-59593-628-8.